Excerpts from analyses by Bernstein/Zerohedge:

The Mag-5 (NVDA plus its top four customers, i.e. Microsoft, Google, Amazon and Meta) have contributed approximately 700 points to the S&P 500 over the last two years. In other words, the S&P 500 excluding the Mag-5 would be 12% lower today. Nvidia alone has contributed 4% to the performance of the S&P 500. This is what we consider the ‘American exceptionalism’ premium on the S&P 500. So anything that threatens Nvidia’s business model, and the “American exceptionalism” theme in the market, is a catalyst for immediate investor panic.

Today’s panic sell-off across the market, but especially in AI names, is focused on the view that China’s DeepSeek AI model rollouts show AI products can be created at a much cheaper cost than what the US hyperscalers are working on.

For those who missed it, late last week a set of new open-source AI models from Chinese company DeepSeek took the investment community (and Twitter/X) by storm. And according to the many hot takes we saw, the implications range anywhere from “That’s really interesting” to “This is the death-knell of the AI infrastructure complex as we know it.”

But while we have heard and highlighted lots of bearish arguments that the AI bubble has burst, some are far less skeptical and even see bullish signs in today’s news. One of them is Bernstein analyst Stacy Rasgon, who writes in a note today (available to pro subscribers) that he spent a good portion of the weekend going through DeepSeek’s (surprisingly detailed) papers and, while he is still digging in, wanted to put some thoughts on paper:

- DeepSeek DID NOT “build OpenAI for $5M”;

- The models look fantastic but they are not miracles; and

- The resulting Twitterverse panic over the weekend seems overblown.

Digging into each of these arguments in more detail:

Did DeepSeek really “build OpenAI for $5M”? Bernstein asks, and answers: “Of course not…” There are actually two model families under discussion. The first is DeepSeek-V3, a Mixture-of-Experts (MoE) large language model which, through a number of optimizations and clever techniques, can provide similar or better performance than other large foundation models while requiring a small fraction of the compute resources to train. DeepSeek actually used a cluster of 2,048 NVIDIA H800 GPUs training for ~2 months (a total of ~2.7M GPU hours for pre-training and ~2.8M GPU hours including post-training). The oft-quoted “$5M” figure is calculated by assuming a $2/GPU-hour rental price for this infrastructure, which is fine, but not really what they did, and it does not include all the other costs associated with prior research and experiments on architectures, algorithms, or data. The second family is DeepSeek R1, which applies Reinforcement Learning (RL) and other innovations to the V3 base model to greatly improve reasoning performance, competing favorably with OpenAI’s o1 reasoning model and others (it is this model that seems to be causing most of the angst). DeepSeek’s R1 paper did not quantify the additional resources required to develop the R1 model (presumably they were substantial as well).
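To make the arithmetic behind the “$5M” figure explicit, here is a small sketch using only the numbers quoted above (~2.8M GPU hours including post-training, an assumed $2/GPU-hour rental price, 2,048 GPUs). It reproduces the headline rental-equivalent number and the ~2-month wall-clock estimate; by construction it says nothing about the research, experimentation and data costs that the figure leaves out.

```python
# Figures quoted in the note (approximate)
GPUS = 2048
PRETRAIN_GPU_HOURS = 2.7e6       # pre-training only
TOTAL_GPU_HOURS = 2.8e6          # including post-training
RENTAL_USD_PER_GPU_HOUR = 2.0    # assumed rental price behind the "$5M" figure


def rental_cost(gpu_hours, usd_per_gpu_hour):
    # rental-equivalent cost of the final training run only
    return gpu_hours * usd_per_gpu_hour


def wall_clock_days(gpu_hours, gpus):
    # spread the GPU hours evenly across the whole cluster
    return gpu_hours / gpus / 24


cost = rental_cost(TOTAL_GPU_HOURS, RENTAL_USD_PER_GPU_HOUR)
days = wall_clock_days(PRETRAIN_GPU_HOURS, GPUS)
print(f"rental-equivalent cost: ${cost / 1e6:.1f}M")  # $5.6M
print(f"pre-training wall clock: {days:.0f} days")    # 55 days, i.e. ~2 months
```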

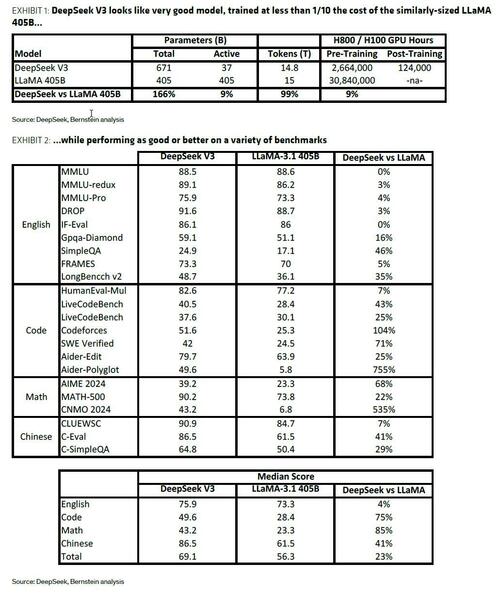

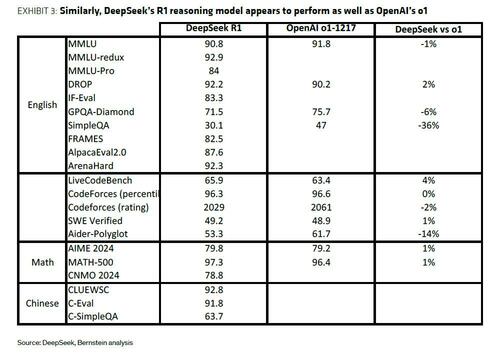

But are DeepSeek’s models good? Absolutely. V3 utilizes a Mixture-of-Experts model (an architecture that combines a number of smaller models working together) with 671B total parameters, of which 37B are active at any one time. This architecture is coupled with a number of other innovations, including Multi-Head Latent Attention (MHLA, which substantially reduces required cache sizes and memory usage), mixed-precision training using FP8 computation (the lower precision enabling better performance), an optimized memory footprint, and a post-training phase, among others. And the model looks really good: it performs as well as or better than other large models on numerous language, coding, and math benchmarks while requiring a fraction of the compute resources to train. For example, V3 required ~2.7M GPU hours (~2 months on DeepSeek’s cluster of 2,048 H800 GPUs) to pre-train, only ~9% of the compute required to pre-train the similarly-sized open-source LLaMA 405B model (Exhibit 1), while ultimately producing as good or (in most cases) better performance on a variety of benchmarks (Exhibit 2).
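For readers unfamiliar with Mixture-of-Experts, the sketch below shows the basic idea of a router that sends each token to only a few experts, so most of the layer’s parameters sit idle for any given token. It is a hypothetical toy (the class `ToyMoE`, its sizes and its softmax top-k gating are illustrative assumptions), not DeepSeek-V3’s actual design, which differs in many details.

```python
import math
import random


def softmax(xs):
    # numerically stable softmax
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    # multiply a matrix (list of rows) by a vector
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class ToyMoE:
    """Hypothetical toy MoE layer: a router picks top_k of n_experts per token."""

    def __init__(self, dim, n_experts, top_k, seed=0):
        rng = random.Random(seed)
        # each "expert" is just a small dim x dim linear map here
        self.experts = [random_matrix(dim, dim, rng) for _ in range(n_experts)]
        # the router produces one score per expert for a given token vector
        self.router = random_matrix(n_experts, dim, rng)
        self.top_k = top_k

    def forward(self, x):
        scores = matvec(self.router, x)
        # keep only the top_k highest-scoring experts
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        # renormalize gate weights over the chosen experts only
        gates = softmax([scores[i] for i in chosen])
        out = [0.0] * len(x)
        for gate, i in zip(gates, chosen):
            y = matvec(self.experts[i], x)  # only the chosen experts are evaluated
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, chosen


moe = ToyMoE(dim=8, n_experts=16, top_k=2)
out, used = moe.forward([1.0] * 8)
print(f"experts evaluated for this token: {len(used)} of {len(moe.experts)}")  # 2 of 16
```

The same principle, at vastly larger scale, is what lets V3 touch only 37B of its 671B parameters per token.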

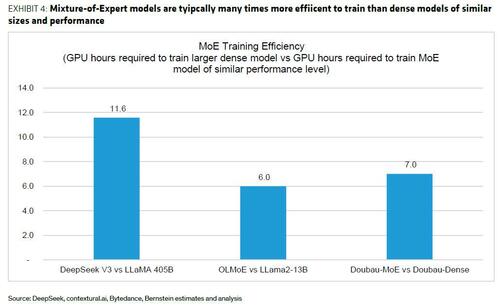

And DeepSeek R1 (reasoning) performs roughly on par with OpenAI’s o1 model (Exhibit 3).

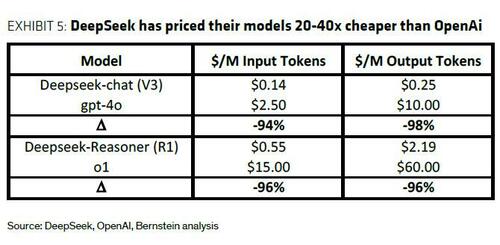

But should the relative efficiency of V3 be surprising? Given that it is an MoE model, we don’t really think so… The point of the mixture-of-experts (MoE) architecture is to significantly reduce the cost to train and run, given that only a portion of the parameter set is active at any one time (for example, when training V3 only 37B out of 671B parameters get updated for any one token, vs dense models where all parameters get updated). A survey of other MoE comparisons suggests typical efficiencies on the order of 3-7x vs similarly-sized dense models of similar performance; V3 looks even better than this (>10x), likely given some of the other innovations the company has brought to bear in the model, but the idea that this is something completely revolutionary seems a bit overblown (Exhibit 4), and not really worthy of the hysteria that has taken over the Twitterverse over the last several days.
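The efficiency claims above can be sanity-checked with a few lines of arithmetic on the quoted figures. The ratio of LLaMA 405B’s parameters to V3’s 37B active parameters is only a crude proxy for per-token training compute (it ignores differences in token counts, hardware, attention cost and utilization), but it lands in the same ballpark as the ~9% figure.

```python
# Figures quoted in the note
TOTAL_PARAMS_B = 671              # V3 total parameters (billions)
ACTIVE_PARAMS_B = 37              # V3 parameters active per token (billions)
LLAMA_PARAMS_B = 405              # dense LLaMA 405B: every parameter is active per token
V3_SHARE_OF_LLAMA_COMPUTE = 0.09  # "~9%" of LLaMA 405B pre-training compute
TYPICAL_MOE_GAIN = (3, 7)         # "typical efficiencies on the order of 3-7x"

active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B
implied_gain = 1 / V3_SHARE_OF_LLAMA_COMPUTE
# crude proxy (assumption): per-token training compute scales with active parameters
per_token_ratio = LLAMA_PARAMS_B / ACTIVE_PARAMS_B

print(f"share of V3 parameters active per token: {active_fraction:.1%}")  # 5.5%
print(f"implied gain vs LLaMA 405B: ~{implied_gain:.0f}x")                # ~11x
print(f"dense-vs-active parameter ratio: ~{per_token_ratio:.1f}x")        # ~10.9x
print("above typical MoE range:", implied_gain > TYPICAL_MOE_GAIN[1])     # True
```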

So why the panic?

To Bernstein it seems to be a combination of:

- A fundamental misunderstanding over the “$5M” number,

- DeepSeek’s deployment of smaller models “distilled” from the larger R1, and

- DeepSeek’s actual pricing to use the models, which is admittedly far below what OpenAI is asking.

As to the first, Bernstein’s main conclusion is that it is categorically false that “China duplicated OpenAI for $5M” (as already noted, the V3 model, while outstanding, does not appear to us to be using anything revolutionary or unknown). The second point is interesting, however. “Distillation” is a process that equips smaller models with the abilities of larger ones by transferring the learnings of a larger “teacher” model to a smaller “student” model. DeepSeek used R1 as a teacher to generate data, which was then used to fine-tune a number of smaller models (based on the open-source LLaMA 8B and 70B and Qwen 1.5B, 7B, 14B, and 32B models) that performed favorably compared to competing models like OpenAI’s o1-mini, demonstrating the potential and viability of the approach. As to the third point, it is absolutely true that DeepSeek’s pricing blows away anything from the competition, with the company pricing its models anywhere from 20-40x cheaper than equivalent models from OpenAI (Exhibit 5). Of course, we do not know DeepSeek’s economics around these (and the models themselves are open and available for free to anyone who wants to work with them), but the whole thing raises some very interesting questions about the role and viability of proprietary vs open-source efforts that are probably worth doing more work on…
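As a loose illustration of the teacher/student workflow, the toy below has a hypothetical “teacher” function label a set of inputs and then fits a much smaller “student” (a straight line) purely on those teacher-generated labels. DeepSeek’s actual procedure fine-tuned LLaMA and Qwen language models on samples generated by R1; the functions here are stand-ins chosen only to show the two steps.

```python
import math


def teacher(x):
    # hypothetical stand-in for a large "teacher" model (R1 in the text)
    return math.sin(x) + 0.5 * x


def fit_student(xs, ys):
    # small "student": y = a*x + b, fitted by closed-form least squares
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


# Step 1: the teacher labels a set of unlabeled inputs (synthetic training data)
inputs = [i / 10 for i in range(-20, 21)]
teacher_labels = [teacher(x) for x in inputs]

# Step 2: the student is trained only on the teacher-generated data
a, b = fit_student(inputs, teacher_labels)


def student(x):
    return a * x + b


# How closely does the student track the teacher on held-out inputs?
held_out = [-1.55, -0.35, 0.25, 1.45]
max_gap = max(abs(student(x) - teacher(x)) for x in held_out)
print(f"student: y = {a:.3f}*x + {b:.3f}, max gap on held-out inputs: {max_gap:.3f}")
```

The student never sees “ground truth”, only the teacher’s outputs, which is the essence of the data-generation style of distillation described above.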

What are the implications? Bernstein’s initial reaction does not include panic, and in fact is far from it. If we acknowledge that DeepSeek may have reduced the cost of achieving equivalent model performance by, say, 10x, we also note that current model cost trajectories are increasing by about that much every year anyway (the infamous “scaling laws…”), which can’t continue forever. In that context, we NEED innovations like this (MoE, distillation, mixed precision etc.) if AI is to continue progressing.
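The “10x saving vs 10x-per-year cost growth” comparison can be made concrete: a one-off efficiency gain g offsets t years of cost growth at rate r when r^t = g, i.e. t = ln g / ln r. The sketch below assumes those simple exponential dynamics; the 4x-per-year case is a hypothetical for comparison, not a figure from the note.

```python
import math


def years_of_growth_offset(efficiency_gain, annual_cost_growth):
    """Years of cost growth that a one-off efficiency gain cancels out.

    Solves annual_cost_growth ** t == efficiency_gain for t.
    """
    return math.log(efficiency_gain) / math.log(annual_cost_growth)


def cost_after(years, base_cost, annual_cost_growth, efficiency_gain=1.0):
    # frontier-model cost `years` from now, divided by any one-off efficiency gain
    return base_cost * annual_cost_growth ** years / efficiency_gain


print(years_of_growth_offset(10, 10))           # 1.0: a 10x saving absorbs one year of 10x growth
print(round(years_of_growth_offset(10, 4), 2))  # 1.66 under hypothetical 4x/yr growth
print(cost_after(2, 1.0, 10, efficiency_gain=10))  # 10.0: two years out, still 10x today's cost
```

Under these assumptions the saving buys time rather than ending the need for more compute, which is the point Bernstein makes.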

And for those watching AI adoption, the Bernstein analysts say they are “firm believers in the Jevons paradox” (i.e. that efficiency gains generate a net increase in demand), and believe that any new compute capacity unlocked is, at this point, far more likely to be absorbed by increased usage and demand than to weaken the long-term spending outlook, as AI compute needs are nowhere close to reaching their limit.
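One standard way to see when the Jevons paradox holds is a constant-elasticity demand curve, Q = A·P^(-ε): total spend P·Q = A·P^(1-ε) rises as the price falls only if ε > 1. The sketch below assumes that curve and purely hypothetical elasticities to compare spend before and after compute becomes 10x cheaper.

```python
def total_compute_spend(price, elasticity, scale=1.0):
    """Spend under a constant-elasticity demand curve: Q = scale * P**(-elasticity)."""
    quantity = scale * price ** (-elasticity)
    return price * quantity


# Compare spend before and after a hypothetical 10x drop in the price of compute
for elasticity in (0.5, 1.0, 1.5):  # hypothetical elasticities
    before = total_compute_spend(1.0, elasticity)
    after = total_compute_spend(0.1, elasticity)
    print(f"elasticity {elasticity}: spend changes by {after / before:.2f}x")
```

With these inputs the spend multiples come out at 0.32x, 1.00x and 3.16x: only in the elastic case does cheaper compute translate into more total spending, which is the scenario Bernstein is betting on.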

Finally, it is a stretch to think the innovations deployed by DeepSeek are completely unknown to the vast number of top-tier AI researchers at the world’s numerous other AI labs (it is not publicly known what the large closed labs have been using to develop and deploy their own models, but we just can’t believe that they have not considered, or perhaps even used, similar strategies themselves). To that end, investment is still accelerating.

So even if China has provided a much cheaper AI platform, the DeepSeek newsflow comes just days after Meta substantially increased its capex for the year (even though we are decidedly skeptical that this will be validated), after the Stargate announcement, and after China announced a trillion yuan (~$140B) AI spending plan.

Bottom line: we are still going to need, and get, a lot of chips, even if they are ultimately much cheaper.
